Here is our training data, shown in the plot below.

[Plot 1]

Suppose we fit a linear function $\theta_0 + \theta_1 x$ to this data, as in the plot below.

[Plot 2]

If we use a quadratic function $\theta_0 + \theta_1 x + \theta_2 x^2$, it fits the data well.

[Plot 3]

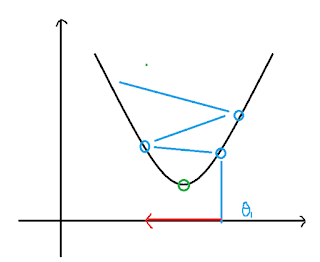

And if we fit a fourth-order polynomial $\theta_0 + \theta_1 x + \theta_2 x^2 + \theta_3 x^3 + \theta_4 x^4$ to the data, the result might look like this plot.

[Plot 4]

Although it fits the training data very well, the curve does not make sense as a relationship between house price and size. This problem is called overfitting, or high variance: we don't have enough data to constrain the model to give us a good hypothesis.
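To make the comparison concrete, here is a minimal sketch that fits the three polynomials above by least squares and prints the training error of each. The five data points are invented for illustration (they are not the data behind the plots), and `training_mse` is a hypothetical helper name.

```python
import numpy as np

# Hypothetical training set, invented for illustration only:
# house size (in 1000s of square feet) and price (in 1000s of dollars).
x = np.array([1.0, 1.5, 2.0, 3.0, 4.0])
y = np.array([200.0, 280.0, 330.0, 380.0, 400.0])

def training_mse(degree):
    """Least-squares fit of a polynomial of the given degree; returns the training MSE."""
    coeffs = np.polyfit(x, y, degree)
    residuals = np.polyval(coeffs, x) - y
    return float(np.mean(residuals ** 2))

# Degrees 1, 2 and 4 correspond to the linear, quadratic and fourth-order fits.
errors = {d: training_mse(d) for d in (1, 2, 4)}
for d, e in errors.items():
    print(f"degree {d}: training MSE = {e:.6f}")
```

With five points, the fourth-order polynomial has five parameters and can pass through every point, so its training error is essentially zero. That says nothing about how it behaves between or beyond the training points, which is exactly the overfitting concern.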

Overfitting:

If we have too many features, the learned hypothesis may fit the training data very well, but fail to generalize to new examples.

Options:

- Reduce the number of features

  - Manually select which features to keep

  - Use a model selection algorithm

  Throwing away some of the features also throws away some of the information they carry.

- Regularization

  - Keep all the features, but reduce the magnitude / values of the parameters $\theta_j$

  - Each feature still contributes a bit to predicting $y$
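As a rough illustration of the regularization option, the sketch below keeps all four polynomial features but adds a penalty on the size of $\theta_1, \dots, \theta_4$. It uses an L2 (ridge-style) penalty solved in closed form, which is one common choice and an assumption here rather than something these notes specify. The data, the helper name `fit_regularized`, and the value `lam = 10.0` are all made up for illustration, and the features are left unscaled to keep the code short.

```python
import numpy as np

# Hypothetical data, invented for illustration only.
x = np.array([1.0, 1.5, 2.0, 3.0, 4.0])
y = np.array([200.0, 280.0, 330.0, 380.0, 400.0])

# Design matrix with columns 1, x, x^2, x^3, x^4 (all features are kept).
X = np.vander(x, 5, increasing=True)

def fit_regularized(lam):
    """Minimize ||X theta - y||^2 + lam * sum_{j>=1} theta_j^2 in closed form."""
    penalty = np.eye(X.shape[1])
    penalty[0, 0] = 0.0  # theta_0 (the intercept) is left unpenalized
    return np.linalg.solve(X.T @ X + lam * penalty, X.T @ y)

theta_plain = fit_regularized(0.0)   # no regularization
theta_reg = fit_regularized(10.0)    # lam = 10.0 is an arbitrary example value

print("||theta_1..4|| without regularization:", np.linalg.norm(theta_plain[1:]))
print("||theta_1..4|| with regularization:   ", np.linalg.norm(theta_reg[1:]))
```

No feature is dropped: every $\theta_j$ is still in the model, but the penalty pulls the parameters toward smaller values, so the fitted curve is less free to bend through every training point.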