[Ex]

$$
\begin{array}{ccccc}
\mathrm{Size~in~feet^2}~(x_1) & \mathrm{\#~bedrooms}~(x_2) & \mathrm{\#~floors}~(x_3) & \mathrm{Age}~(x_4) & \mathrm{Price~in~\$1000}~(y) \\
\hline
2104 & 5 & 1 & 45 & 460 \\
1416 & 3 & 2 & 40 & 232 \\
1534 & 3 & 2 & 30 & 315 \\
852 & 2 & 1 & 36 & 178 \\
... & ... & ... & ... & ...
\end{array}
$$

Notation:

- n = number of input variables (features)

- m = number of training examples

- \(x^{(i)}\) = input variables (features) of the \(i^{th}\) training example.

- \(x^{(i)}_j\) = value of input variable j in the \(i^{th}\) training example.
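As a quick check of this notation, take the rows of the table above as training examples 1 to 4 (an assumption about ordering; the table is truncated, so m itself is not known). Here n = 4, and for the second row:

$$x^{(2)} = \begin{bmatrix} 1416 \\ 3 \\ 2 \\ 40 \end{bmatrix}, \qquad x^{(2)}_3 = 2, \qquad y^{(2)} = 232$$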

Hypothesis: \( h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_3 + \theta_4 x_4\)

For notational convenience, define \(x_0 = 1\) (that is, \( x^{(i)}_0 = 1\) for every training example), so the hypothesis can be rewritten as:

$$\begin{align*} h_\theta(x) &= \theta_0 x_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_3 + \theta_4 x_4 \newline &= \theta^T x \end{align*}$$
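The vectorized form \(\theta^T x\) can be sketched in a few lines of plain Python. This is only an illustration: the helper names `add_bias` and `hypothesis` and the values in `theta` are assumptions made up for the example, not fitted parameters.

```python
def add_bias(features):
    """Prepend x_0 = 1 so the feature list lines up with theta_0..theta_n."""
    return [1.0] + list(features)


def hypothesis(theta, x):
    """Compute h_theta(x) = theta^T x, where x already includes x_0 = 1."""
    return sum(t * xj for t, xj in zip(theta, x))


# First row of the table: size, bedrooms, floors, age.
x = add_bias([2104, 5, 1, 45])

# Hypothetical parameter values, chosen only to show the arithmetic.
theta = [80.0, 0.1, 10.0, 5.0, -1.0]

# 80*1 + 0.1*2104 + 10*5 + 5*1 - 1*45, roughly 300.4 (in $1000s)
prediction = hypothesis(theta, x)
print(prediction)
```

Adding \(x_0 = 1\) is what lets \(\theta_0\) be handled by the same sum as every other parameter, with no special case for the intercept.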

The gradient descent update for multiple variables is then defined as below:

[Def]

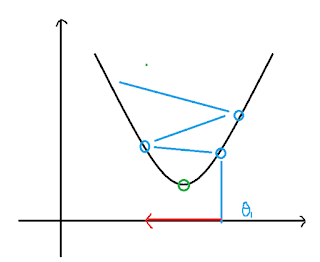

$$\begin{align*}& \text{repeat until convergence:} \; \lbrace \newline \; & \theta_j := \theta_j - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_j^{(i)} \; & \text{for j := 0...n}\newline \rbrace\end{align*}$$
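The update rule can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: the function names are hypothetical, "repeat until convergence" is approximated by a fixed number of iterations, and the toy data set is made up (generated from \(y = 1 + 2x_1 + 3x_2\)) rather than taken from the housing table.

```python
def hypothesis(theta, x):
    """h_theta(x) = theta^T x, where x already includes x_0 = 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def gradient_step(theta, X, y, alpha):
    """Apply one simultaneous update to every theta_j, for j = 0..n."""
    m = len(X)
    # Errors are computed once from the old theta, so all theta_j
    # are updated simultaneously rather than one after another.
    errors = [hypothesis(theta, X[i]) - y[i] for i in range(m)]
    return [
        theta[j] - alpha * sum(errors[i] * X[i][j] for i in range(m)) / m
        for j in range(len(theta))
    ]


def gradient_descent(X, y, alpha, iterations):
    """Run a fixed number of steps (an assumed stand-in for a convergence test)."""
    theta = [0.0] * len(X[0])
    for _ in range(iterations):
        theta = gradient_step(theta, X, y, alpha)
    return theta


# Made-up toy data: each row is [x_0, x_1, x_2] with x_0 = 1.
X = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [1, 1, 1]]
y = [1, 3, 4, 6]

theta = gradient_descent(X, y, alpha=0.5, iterations=2000)
print(theta)  # approaches [1, 2, 3]
```

The learning rate and iteration count here were picked for this toy data only; other data sets would likely need different values.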
